This is part 2 of a two-part series sharing Op-Eds I wrote for my Law 432.D course titled “Accountable Computer Systems.” This blog will likely go up on the course website in the near future, but as I am hoping to speak to and reference things I have written for some upcoming presentations, I am sharing it here first. This blog discusses the hot topic of ‘humans in the loop’ for automated decision-making systems (“ADM”). As you will see from this Op-Ed, I am quite critical of the current Canadian Government self-regulatory regime’s treatment of this concept.

As a side note, there is a fantastic new resource called TAG (Tracking Automated Government) that I would suggest those researching this space add to their bookmarks. I found it on X/Twitter through Professor Jennifer Raso’s post. For those who are also newer to the space or coming to it through immigration, Jennifer Raso’s research on automated decision-making, particularly in the context of administrative law and frontline decision-makers, is outstanding. We are leaning on her research as we develop our own work in the immigration space.

Without further ado, here is the Op-Ed.

Who are the humans involved in hybrid automated decision-making (“ADM”)? Are they placed into the system (or loop) to provide justification for the machine’s decisions? Are they there to assume legal liability? Or are they simply there to ensure humans still have a job to do?

Effectively regulating hybrid ADM systems requires an understanding of the various roles played by the humans in the loop, and clarity as to the policymaker’s intentions when placing them there. This is the argument made by Rebecca Crootof et al. in their article, “Humans in the Loop,” recently published in the Vanderbilt Law Review.[ii]

In this Op-Ed, I discuss the nine roles that humans play in hybrid decision-making loops as identified by Crootof et al. I then turn to my central focus: reviewing Canada’s Directive on Automated Decision-Making (“DADM”)[iii] for its discussion of human intervention and humans in the loop. I suggest that Canada’s primary Government self-regulatory AI governance tool not only falls short, but supports an approach of silence towards the role of humans in Government ADMs.

What is a Hybrid Decision-Making System? What is a Human in the Loop?

A hybrid decision-making system is one where machine and human actors interact to render a decision.[iv]

The oft-used regulatory definition of a human in the loop is “an individual who is involved in a single, particular decision made in conjunction with an algorithm.”[v] Hybrid systems are purportedly distinguishable from “human off the loop” systems, where the processes are entirely automated and humans have no ability to intervene in the decision.[vi]

Crootof et al. challenge this regulatory definition and understanding, labelling it misleading because its “focus on individual decision-making obscures the role of humans everywhere in ADMs.”[vii] They suggest instead that machines cannot exist or operate independently of humans, and therefore that regulators must adopt a broader definition and framework for what constitutes a system’s responsibilities.[viii] Their definition concludes that each human in the loop, embedded in an organization, constitutes a “human in the loop of complex socio-technical systems for regulators to target.”[ix]

In discussing the law of the loop, Crootof et al. describe the numerous ways in which the law requires, encourages, discourages, and even prohibits humans in the loop.[x]

Crootof et al. then identify the MABA-MABA (Men Are Better At, Machines Are Better At) trap,[xi] a common policymaker position that erroneously assumes the best of both worlds in the division of roles between humans and machines, without considering how the two can also amplify each other’s weaknesses.[xii] Crootof et al. find that the myopic MABA-MABA framing “obscures the larger, more important regulatory question animating calls to retain human involvement in decision-making.”

As Crootof et al. summarize:

“Specifically, what do we want humans in the loop to do? If we don’t know what the human is supposed to do, it’s impossible to assess whether a human is improving a system’s performance or whether regulation has achieved its goals by adding a human.”[xiii]

Crootof et al.’s Nine Roles for Humans in the Loop and Recommendations for Policymakers

Crootof et al. set out nine non-exhaustive but illustrative roles for humans in the loop. These roles are: (1) corrective; (2) resilience; (3) justificatory; (4) dignitary; (5) accountability; (6) stand-in; (7) friction; (8) warm-body; and (9) interface.[xiv] For ease of reference, they are briefly described in a table attached as an appendix to this Op-Ed.

Crootof et al. explain that these nine roles are not mutually exclusive, and indeed humans can play many of them at the same time.[xv]

One of Crootof et al.’s three primary recommendations is that policymakers should be intentional and clear about what roles the humans in the loop serve.[xvi] In another recommendation, they suggest that context matters, given the complexity of these roles and the differing aims of regulators, and that ADMs can only be regulated effectively once these complex roles are identified.[xvii]

Applying this to the EU Artificial Intelligence Act (as it then was[xviii]) (“EU AI Act”), Crootof et al. are critical of how the Act separates the human roles of providers and users, leaving nobody responsible for the human-machine system as a whole.[xix] Crootof et al. ultimately highlight a core challenge for the EU AI Act and other laws: how to “verify and validate that the human is accomplishing the desired goals,” especially in light of the EU AI Act’s vague goals.

Having briefly summarized Crootof et al.’s position, I devote the remainder of this Op-Ed to a key Canadian regulatory framework, the DADM, and its silence on the question of the human role that Crootof et al. raise.

The Missing Humans in the Loop in the Directive on Automated Decision-Making and Algorithmic Impact Assessment Process

Directive on Automated Decision-Making

Canada’s DADM and its companion tool, the Algorithmic Impact Assessment (“AIA”), are soft-law[xx] policies aimed at ensuring that “automated decision systems are deployed in a manner that reduces risks to clients, federal institutions and Canadian society, and leads to more efficient, accurate, and interpretable decisions made pursuant to Canadian law.”[xxi]

One of the areas addressed in both the DADM and the AIA is human intervention in Canadian Government ADMs. The DADM states:[xxii]

Ensuring human intervention

6.3.11

Ensuring that the automated decision system allows for human intervention, when appropriate, as prescribed in Appendix C.

6.3.12

Obtaining the appropriate level of approvals prior to the production of an automated decision system, as prescribed in Appendix C.

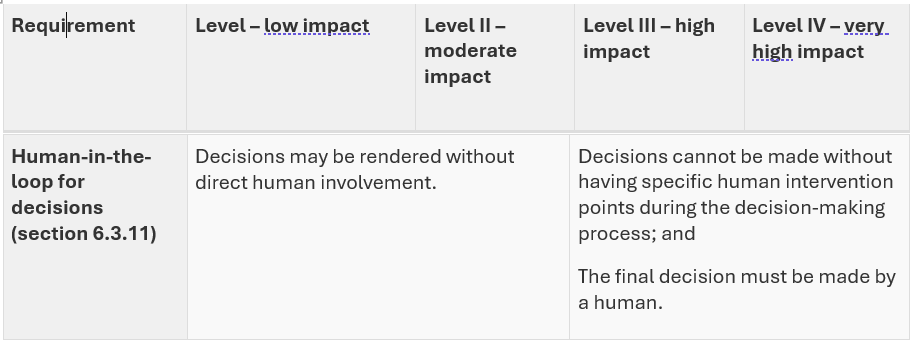

Per Appendix C of the DADM, whether a human in the loop is required depends on the impact level that the agency itself self-assesses through the AIA’s scoring system. For Level 1 and 2 (low and moderate impact)[xxiii] projects, there is no requirement for a human in the loop, let alone any explanation of the human intervention points (see the table extracted from the DADM).

I would argue that, to avoid further explaining human intervention, which would in turn require explaining the role of the humans in making the decision, it is easier for the agency to self-assess (score) a project as one of low to moderate impact. The AIA creates few barriers, and provides no arm’s-length review mechanism, to prevent an agency from strategically self-scoring a project below the high-impact threshold.[xxiv]
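To make the Appendix C mechanism concrete, here is a minimal Python sketch. It is not drawn from any Government code. It assumes, consistent with the reading above, that Levels 1 and 2 carry no human-in-the-loop requirement while Levels 3 and 4 do. The single score percentage, the cut-offs in `LEVEL_CUTOFFS`, and the names `AIAResult`, `impact_level` and `assess` are all hypothetical simplifications of the AIA questionnaire.

```python
from dataclasses import dataclass

# Hypothetical cut-offs (percent of the maximum possible AIA score) separating
# Levels 1 | 2 | 3 | 4. Illustrative assumptions, not quoted from the DADM.
LEVEL_CUTOFFS = (25.0, 50.0, 75.0)


@dataclass(frozen=True)
class AIAResult:
    score_pct: float      # self-assessed score as a percentage of the maximum
    impact_level: int     # 1 (low) to 4 (very high)
    human_required: bool  # must a human be in the loop under Appendix C?


def impact_level(score_pct: float) -> int:
    """Map a self-assessed score percentage to an impact level from 1 to 4."""
    if not 0.0 <= score_pct <= 100.0:
        raise ValueError("score_pct must be between 0 and 100")
    # Each cut-off the score exceeds moves the project up one level.
    return 1 + sum(score_pct > cutoff for cutoff in LEVEL_CUTOFFS)


def assess(score_pct: float) -> AIAResult:
    level = impact_level(score_pct)
    # Levels 1 and 2: no human in the loop required; Levels 3 and 4: required.
    return AIAResult(score_pct, level, human_required=level >= 3)


# Two projects half a percentage point apart land on opposite sides of the line.
for pct in (50.0, 50.5):
    print(assess(pct))
```

Under these assumptions, the loop prints `AIAResult(score_pct=50.0, impact_level=2, human_required=False)` followed by `AIAResult(score_pct=50.5, impact_level=3, human_required=True)`. A small difference in a score the agency assigns to itself decides whether any human in the loop is required at all.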

The published AIAs themselves suggest that this concern, that agencies can avoid discussing the human in the loop, plays out in practice.[xxv] Of the fifteen published AIAs, fourteen are self-declared as moderate impact, with only one declared as little-to-no impact. Yet these AIAs are situated in high-impact areas such as mental health benefits, access to information, and immigration.[xxvi] Each of the AIAs contains the same standard language stating that a human in the loop is not required.[xxvii]

In the AIA for the Advanced Analytics Triage of Overseas Temporary Resident Visa Applications, for example, IRCC further rationalizes that “All applications are subject to review by an IRCC officer for admissibility and final decision on the application.”[xxviii] This seems to suggest that a human officer plays a corrective role, but this is not explicitly spelled out. Indeed, it is open to contestation from critics who see the officer’s role as more of a rubber-stamp (dignitary) role subject to the influence of automation bias.[xxix]

Recommendation: Requiring Policymakers to Disclose and Discuss the Role of the Humans in the Loop

While I have fundamental concerns with the DADM itself (it lacks regulatory teeth, lacks input from public stakeholders through a comment and due process challenge period,[xxx] and is driven by efficiency interests[xxxi]), I will set these concerns aside to offer a tangible recommendation for the current DADM and AIA process.[xxxii]

I would suggest that, beyond the question of impact, in all cases of hybrid systems where a human will be involved in ADMs, the policymaker should provide a detailed explanation of what roles these humans will play. I am not naïve to the fact that policymakers will not proactively admit to engaging a “warm body” or “stand-in” human in the loop. Still, such a requirement at least starts a shared discussion and puts some onus on the policymaker to consider both proving and disproving a particular role that it may be assigning.

My specific recommendation is to require, as part of an AIA, a detailed human capital/resources plan in which the Government agency identifies and explains the roles of the humans throughout the entire ADM lifecycle, from initiation to completion.
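One way to picture such a plan is as a structured disclosure that pairs each human with a lifecycle stage and one or more of Crootof et al.’s nine roles. The Python sketch below is purely illustrative. The classes `Role` and `HumanInLoop`, the `plan_gaps` check, the stage names and the sample entries are hypothetical. They do not reflect any existing AIA form or published project.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """Crootof et al.'s nine illustrative (non-exhaustive) roles."""
    CORRECTIVE = "corrective"
    RESILIENCE = "resilience"
    JUSTIFICATORY = "justificatory"
    DIGNITARY = "dignitary"
    ACCOUNTABILITY = "accountability"
    STAND_IN = "stand-in"
    FRICTION = "friction"
    WARM_BODY = "warm-body"
    INTERFACE = "interface"


@dataclass
class HumanInLoop:
    position: str     # who the human is (hypothetical job title)
    stage: str        # lifecycle stage where this human acts
    roles: set[Role]  # roles the agency declares this human plays
    explanation: str  # how the agency will show the role is actually fulfilled


def plan_gaps(plan: list[HumanInLoop], stages: tuple[str, ...]) -> list[str]:
    """List gaps in a proposed human-roles plan for reviewers to question."""
    gaps = []
    for human in plan:
        if not human.roles:
            gaps.append(f"{human.position}: no role declared")
        if not human.explanation.strip():
            gaps.append(f"{human.position}: role(s) not explained")
    covered = {human.stage for human in plan}
    for stage in stages:
        if stage not in covered:
            gaps.append(f"stage '{stage}': no human identified")
    return gaps


# Hypothetical lifecycle stages and entries, for illustration only.
STAGES = ("design", "deployment", "final decision")
plan = [
    HumanInLoop("reviewing officer", "final decision",
                {Role.CORRECTIVE, Role.ACCOUNTABILITY},
                "Officer reviews each file and can override the system."),
    HumanInLoop("model owner", "design", set(), ""),
]

for gap in plan_gaps(plan, STAGES):
    print(gap)
```

With these sample entries, the check flags the model owner as having no declared or explained role, and flags the deployment stage as having no identified human. A check like this cannot verify that a human actually performs a declared role. It only makes omitted or unexplained roles visible to reviewers, which supports the shared discussion the recommendation aims to start.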

This idea also appears consistent with best practices in our key neighbouring jurisdiction, the United States. On 28 March 2024, a U.S. Presidential Memorandum aimed at Federal Agencies, titled “Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence,” was released. The Presidential Memorandum devotes significant attention to defining and assigning specific roles for humans in the AI governance structure.[xxxiii]

This level of granularity and specificity stands in sharp contrast and direct counterpoint to the lack of discussion of human roles in the DADM and AIA.[xxxiv]

Conclusion: The DADM’s Flexibility Supports Incorporating Changes

Ultimately, proactive disclosure and a more fulsome discussion of human roles will improve transparency and add a layer of accountability for ADMs. Regulators could hold Government policymakers to such a requirement, or at minimum encourage them to discuss these roles proactively.

Fortunately, and as a positive note to end this Op-Ed, the DADM is not a static document. It undergoes regular reviews every two years.[xxxv]

The malleability of soft law and the openness of the Treasury Board Secretariat to engage in dialogue with stakeholders[xxxvi] allow a policy gap such as the one I have identified to be addressed faster than if it were found in legislation.

While the current silence around the role of humans in the DADM is disconcerting, it is by no means too late to add human creativity and critical thinking, in a corrective role, to this policy-design loop.

Appendix: Crootof et al.’s Nine Roles

| Role | Definition |
|---|---|
| A. Corrective Roles | “Improve system performance” (Crootof et al. at 473) for the purposes of accuracy (Crootof et al. at 475). Three types: (1) Error correction: fix algorithmic errors (Crootof et al. at 475-476); (2) Situational correction: “improve system’s outputs by tailoring algorithm’s recommendation based on population-level data to individual circumstances” (Crootof et al. at 467); deploy principles of equity when the particularities of human experience outrun our (or in this case, the machine’s) ability to make general rules (Crootof et al. at 477); (3) Bias correction: “identify and counteract algorithmic biases” (Crootof et al. at 477). |
| B. Resilience[xxxvii] Roles | “act as a failure mode or otherwise stop the system from operating in an emergency” (Crootof et al. at 473); “acting as a backstop when an automated system malfunctions or breaks down” (Crootof et al. at 478). |
| C. Justificatory Roles | “improve system’s legitimacy by providing reasoning for decisions” (Crootof et al. at 473); “make decisions seem more trustworthy, regardless of whether or not they provide accurate or salubrious justifications” (Crootof et al. at 479). |
| D. Dignitary Roles | “protect the dignity of the humans affected by the decision” (Crootof et al. at 473); combat and avoid objectification to alleviate dignitary concerns (Crootof et al. at 481). |
| E. Accountability Roles | “allocate liability or censure” (Crootof et al. at 473-474); “ensure someone is legally liable, morally responsible, or otherwise accountable for a system’s decisions” (Crootof et al. at 482). |
| F. Stand-In Roles | “act as proof that something has been done or stand in for humans and human values” (Crootof et al. at 474); “demonstrating that, just in case something is wrong with automation, something has been done” (Crootof et al. at 484). |
| G. Friction Roles | “slow the pace of automated decision-making” (Crootof et al. at 474); “make them more idiosyncratic and less interoperable” (Crootof et al. at 484). |
| H. “Warm Body” Roles | “preserve human jobs” (Crootof et al. at 474); augmented rather than artificial intelligence, stressing the importance of keeping humans involved in processes (Crootof et al. at 486); “prioritizes the value of individual humans in the loop, rather than the humans on which the algorithmic system acts” (Crootof et al. at 486). |
| I. Interface Roles | “link the systems to human users” (Crootof et al. at 474); “helping users interact with an algorithmic system” (Crootof et al. at 487); “making the system more intuitive or the results more palatable” (Crootof et al. at 487). |

References

[i] The author would like to thank the helpful reviewers for their feedback and comments. This final Op-Ed version was revised for written expression and clarity, to add a more explicit and concrete recommendation for adding humans in the loop to the Canadian Algorithmic Impact Assessment (“AIA”) regime, and to discuss further AIA literature on both Canadian governance and global best practices. Due to word count limitations, much of this additional discussion occurs in the footnotes. Additionally, because of time constraints, I was unable to fully address one reviewer’s suggestion to look at other jurisdictions’ AIAs, but have noted this as a future academic research topic. I do briefly reference a U.S. AI governance policy released on 28 March 2024 (a day before the paper’s deadline) as a point of reference for my Op-Ed’s core recommendation.

[ii] Rebecca Crootof, Margot Kaminski & W. Nicholson Price II, “Humans in the Loop” (2023) 76:2 Vanderbilt Law Review 429. Accessed online: <https://cdn.vanderbilt.edu/vu-wordpress-0/wp-content/uploads/sites/278/2023/03/19121604/Humans-in-the-Loop.pdf>. See: Crootof et al. at 487 for a summary of this argument.

[iii] Treasury Board Secretariat, Directive on Automated Decision-Making (Ottawa: Treasury Board Secretariat, 2019). Accessed online: <https://www.tbs-sct.canada.ca/pol/doc-eng.aspx?id=32592> (Last modified: 25 April 2023)

[iv] Therese Enarsson, Lena Enqvist & Markus Naarttijärvi, “Approaching the human in the loop – legal perspectives on hybrid human/algorithmic decision-making in three contexts” (2022) 31:1 Information & Communications Technology Law 123-153, DOI: 10.1080/13600834.2021.1958860 at 126; Crootof et al. at 434.

[v] Crootof et al. at 440. They explain that this definition captures systems where humans exercise discretion in using algorithmic systems to reach a particular decision, systems where humans and algorithms hand off or perform tasks in concert, and arguably even systems that require human review of an automated decision.

[vi] Crootof et al. at 441. This is just one example of the contrasting approaches to defining humans in the loop. Enarsson et al. highlight that in a human-in-the-loop system there is capability for human intervention in every decision cycle of the system, while in a human-off-the-loop system, humans intervene through the design and monitoring of the system. They also define a third type, human-in-command (HIC) systems or meta-autonomy, where humans exercise control and oversight over whether and how to implement an otherwise fully automated system. Note also that ‘human intervention’ and ‘humans in the loop’ are terms used in the Government’s DADM, but neither is defined there.

[vii] Crootof et al. at 445.

[viii] Crootof et al. at 444.

[ix] Crootof et al. at 444.

[x] Crootof et al. at 446-459. They provide examples of how the same interest, such as regulatory arbitrage, can lead the law to foster human involvement in some cases (e.g. allowing a human-reviewed medical software program to escape higher regulatory requirements) but to discourage it in others (e.g. the National Security Agency minimizing human review of surveilled materials so that the review is not classified as a search).

[xi] Crootof et al. at 437.

[xii] Crootof et al. at 467.

[xiii] Crootof et al. at 473.

[xiv] Crootof et al. at 473-474.

[xv] Crootof et al. at 473.

[xvi] Crootof et al. at 487.

[xvii] Crootof et al. at 492.

[xviii] Article 14 of the final EU AI Act appears to contain the same treatment of human oversight that Crootof et al. discussed and critiqued, including the separation of user and provider human roles. See: EU AI Act, Article 14. Accessed online: <https://artificialintelligenceact.eu/article/14/>

[xix] Crootof et al. at 503-504.

[xx] “The term soft law is used to denote agreements, principles and declarations that are not legally binding.” See: European Center for Constitutional and Human Rights, “Definition: Hard law/soft law”. Accessed online: <https://www.ecchr.eu/en/glossary/hard-law-soft-law/>

[xxi] Specifically, the DADM mandates that a Canadian Government agency complete and release the final results of an AIA prior to the production of any automated decision system. These AIAs must also be reviewed and updated as the functionality and scope of the ADM system change.

[xxii] DADM at Appendix C.

[xxiii] DADM at Appendix B.

[xxiv] One of my reviewers asked whether there is an agency whose role is, or should be, to police declarations by agencies that do not play their part. In Canada, the Treasury Board Secretariat (“TBS”) is the agency that administers the AIA and, in theory, is supposed to play this role. However, I have obtained, through Access to Information requests, several copies of email correspondence pertaining to AIA approval in which review and feedback occurred merely over the course of a few exchanged emails.

[xxv] In response to a reviewer’s question: my central argument is that the AIA’s structure allows entities to avoid discussing the role of humans in the loop. To date, they have all self-assessed their projects as moderate risk at most, so the projects do not even require a human in the loop, let alone a discussion of the roles of the humans in the loop. The major negative impacts I see in this silence are a lack of transparency and accountability, and the risk that humans are viewed merely as a justificatory safeguard for ADMs without further interrogation. I agree with Crootof et al. that there could be other roles not contemplated in the list of nine, but the list does provide a starting point for debate and discussion between the policymaker drafting the AIA and the TBS, peer reviewers, and the reviewing public.

[xxvi] It is interesting to contrast the EU AI Act, “Annex III: High-Risk AI Systems Referred to in Article 6(2)”, which classifies migration management as high-risk at 7(d). Accessed online: <https://artificialintelligenceact.eu/annex/3/>

[xxvii] See: Canada, Treasury Board Secretariat, Algorithmic Impact Assessment tool (Ottawa: modified 25 April 2023). Published AIAs can be accessed here: <https://search.open.canada.ca/opendata/?sort=metadata_modified+desc&search_text=Algorithmic+Impact+Assessment&page=1>

[xxviii] Government of Canada, Open Government, “Algorithmic Impact Assessment – Advanced Analytics Triage of Overseas Temporary Resident Visa Applications – AIA for the Advanced Analytics Triage of Overseas Temporary Resident Visa Applications (English)” 21 January 2022. Accessed online: <https://open.canada.ca/data/dataset/6cba99b1-ea2c-4f8a-b954-3843ecd3a7f0/resource/9f4dea84-e7ca-47ae-8b14-0fe2becfe6db/download/trv-aia-en-may-2022.pdf> (Last modified: 29 November 2023)

[xxix] Crootof et al. at 482. Notably, automation bias has only recently been acknowledged in a publicly posted Government document. See: Government of Canada, Open Government, “International Experience Canada Work Permit Eligibility Model – Peer Review of the International Experience Canada Work Permit Eligibility Model – Executive Summary (English)” 9 February 2024. Accessed online: <https://open.canada.ca/data/dataset/b4a417f7-5040-4328-9863-bb8bbb8568c3/resource/e8f6ca6b-c2ca-42c7-97d8-394fe7663382/download/peer-review-of-the-international-experience-canada-work-permit-eligibility-model-executive-summa.pdf>

[xxx] This is a best practice identified by Reisman et al. in “Algorithmic Impact Assessments: A Practical Framework for Public Agency Accountability” (AI Now, April 2018) at 9-10. Accessed online: <https://ainowinstitute.org/publication/algorithmic-impact-assessments-report-2>. The DADM and AIA currently do not have a public comment process.

[xxxi] Crootof et al. at 454-455.

[xxxii] Per the DADM at 1.3, there is a mandated two-year review period. The DADM completed its third review in April 2023.

[xxxiii] Shalanda D. Young, Memorandum for the Heads of Executive Departments and Agencies, “Subject: Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence”, Executive Office of the President of the United States – Office of Management and Budget, 28 March 2024 at 4-5, 18, 26. Accessed online: <https://www.whitehouse.gov/wp-content/uploads/2024/03/M-24-10-Advancing-Governance-Innovation-and-Risk-Management-for-Agency-Use-of-Artificial-Intelligence.pdf>

[xxxiv] The Presidential Memorandum appears to be aimed more broadly at the use of AI and AI governance by U.S. agencies, rather than specifically at ADM approval processes. It does raise the question of whether the discussion of humans in the loop, or of AI governance generally, should be built into the DADM and each AIA, or should instead be a standalone policy that applies to all Government AI projects. My recommendation would be both: a broader directive, and requirements for AIAs to specifically discuss compliance with that broader directive.

[xxxv] The review period was originally set in the DADM at six months but was recently extended to two years. I would argue that, with the AIA still containing many gaps, a six-month review might still be beneficial. See: DADM 3rd Review Summary, One Pager, Fall 2022. Accessed online: <https://wiki.gccollab.ca/images/2/28/DADM_3rd_Review_Summary_%28one-pager%29_%28EN%29.pdf?utm_source=Nutshell&utm_campaign=Next_Steps_on_TBSs_Consultation_on_the_Directive_on_Automated_Decision_Making&utm_medium=email&utm_content=Council_Debrief__July_2021>

[xxxvi] I have been in frequent online dialogue with Benoit Deshaies, Director of Data and Artificial Intelligence, Treasury Board of Canada Secretariat, who has often responded to both my public tweets and my private messages with questions about the AIA process. See e.g.: a recent Twitter/X exchange: <https://twitter.com/MrDeshaies/status/1769703302730596832>

[xxxvii] Crootof et al. at 478 define resilience as the ability of a “complex system to withstand failure by minimizing the harms from bad outcomes.”